Podarcis bocagei, AND P. LUSITANICUS

| Field | Value |
| --- | --- |
| publication ID | https://doi.org/10.1093/zoolinnean/zlac087 |
| DOI | https://doi.org/10.5281/zenodo.7926984 |
| persistent identifier | https://treatment.plazi.org/id/03A087FF-FFF0-5753-5166-B397C381F9AF |
| treatment provided by | Plazi |
| scientific name | Podarcis bocagei |
| status | |

DISCRIMINATION BETWEEN PODARCIS BOCAGEI AND P. LUSITANICUS

The overall performance of the three methods for image classification of P. bocagei and P. lusitanicus in the four different datasets is shown in Table 2. Detailed results, including training, validation and test-set evaluation for all cross-validation sets, are shown in the Supporting Information, Tables S1–S4. Accuracy is generally high, ranging from 87.3% for InceptionV3 in female dorsal images to 94.8% for Inception-ResNetV2 in male dorsal images. AUC ranges from 0.931 using InceptionV3 in female dorsal images to 0.984 using Inception-ResNetV2 in male dorsal and head lateral images. F1-scores show that, typically, P. lusitanicus is more frequently misclassified than P. bocagei, for both types of images and for both sexes. All three methods perform similarly in all datasets across the three performance metrics.

Identification of males is generally more accurate than that of females. Considering all five cross-validation replicates of the three models, the identification accuracy of males is significantly higher than that of females only when considering dorsal images (P = 0.048, Mann–Whitney–Wilcoxon test). The same result is obtained, but even more pronounced, using the other metrics (P = 0.009 and P = 0.030 for AUC and F1-scores, respectively). With respect to head lateral images, the difference in identification accuracy between sexes also exists, but it is significant only for AUC (P = 0.046, Mann–Whitney–Wilcoxon test). There is no difference in performance between image perspectives, in either males or females.
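The comparison between sexes can be illustrated with a minimal sketch of a two-sided Mann–Whitney–Wilcoxon test. This is not the authors' code: the function names are hypothetical, the accuracy values are made up for illustration, and the P-value uses a plain normal approximation without tie or continuity correction, so it will not exactly reproduce values from a statistics package.

```python
import math

def average_ranks(values):
    """Return 1-based ranks, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions i..j are 0-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks

def mann_whitney_u(x, y):
    """Return (U statistic of x, two-sided P) using a normal approximation.

    Assumption: no tie correction and no continuity correction.
    """
    n1, n2 = len(x), len(y)
    ranks = average_ranks(list(x) + list(y))
    rank_sum_x = sum(ranks[:n1])
    u1 = rank_sum_x - n1 * (n1 + 1) / 2
    mean_u = n1 * n2 / 2
    sd_u = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (u1 - mean_u) / sd_u
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail of the normal
    return u1, p

# Hypothetical per-replicate accuracies (NOT values from the study).
male_acc = [0.94, 0.95, 0.93, 0.96, 0.945]
female_acc = [0.88, 0.90, 0.89, 0.91, 0.875]

u_stat, p_value = mann_whitney_u(male_acc, female_acc)
print(f"U = {u_stat}, P = {p_value:.4f}")
```

In the study each group would hold the scores of all five cross-validation replicates of the three models; the sketch uses five values per group only to stay short.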

As an extension to this basic approach, we tested whether model ensembles (calculated by averaging predictions of different models) would increase classification success. Model ensembles within each of the four datasets do not always improve classification success compared to the best single model (see results in Table 2). For instance, in the case of head lateral images, prediction performance is worse with the model ensemble than when using the best-performing model alone. In the case of dorsal images, the improvement is slight for males and more substantial for females.
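Averaging predictions can be sketched as follows. This is a minimal illustration, assuming each model outputs one probability per class for a given image; the function names and the probability values are hypothetical and do not come from the study.

```python
def average_predictions(model_probs):
    """Average the class-probability vectors that several models give one image."""
    n_models = len(model_probs)
    n_classes = len(model_probs[0])
    return [sum(p[c] for p in model_probs) / n_models for c in range(n_classes)]

def predict_class(probs):
    """Return the index of the class with the highest probability."""
    return max(range(len(probs)), key=lambda c: probs[c])

# Hypothetical outputs of three models for one image.
# Assumed class order: index 0 = P. bocagei, index 1 = P. lusitanicus.
probs_by_model = [
    [0.55, 0.45],
    [0.30, 0.70],
    [0.40, 0.60],
]

ensemble = average_predictions(probs_by_model)
label = predict_class(ensemble)
print(ensemble, label)
```

Here the first model alone would pick class 0, while the averaged prediction picks class 1, which shows how an ensemble can either correct or override a single model.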

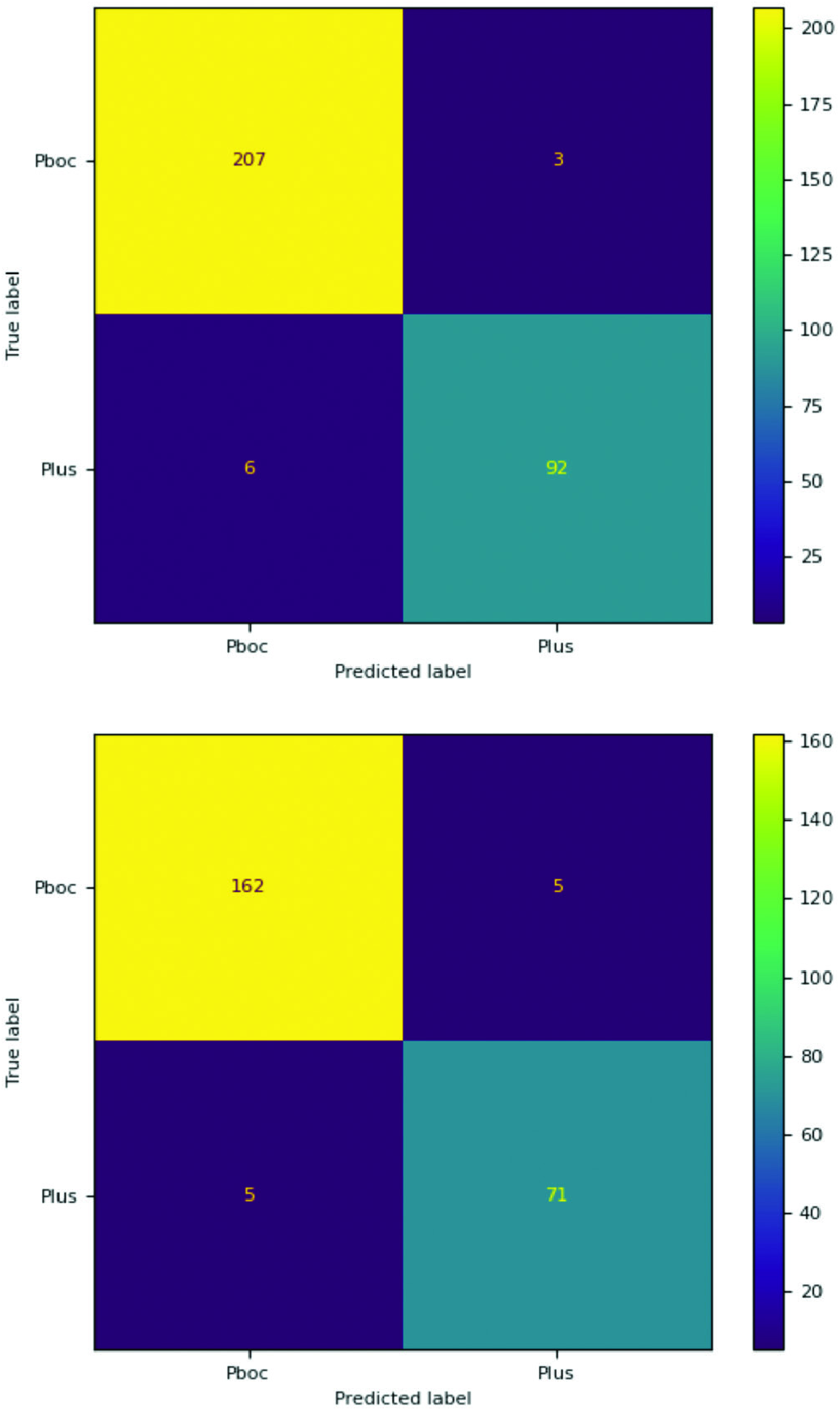

By contrast, combining the predictions from different views results in a much higher classification success in all cases, with accuracy reaching 97.1% for males and 95.9% for females. These results are presented in Table 3 and the corresponding confusion matrices in Figure 2.

Grad-CAM heatmaps were produced only for the model showing the highest accuracy in each case (Inception-ResNetV2 in the case of male dorsal and head lateral images, ResNet50 in the case of female dorsal images and InceptionV3 in the case of female head lateral images). Visualization of the heatmaps confirms that the models are indeed considering the lizard images for classification and not external features (like human fingers, writings, shadows and other non-lizard elements that appear in some images).
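For reference, the text does not restate how a Grad-CAM heatmap is computed. The standard formulation, given here as background and not as a description of the authors' exact implementation, weights each feature map of a chosen convolutional layer by the spatially averaged gradient of the class score. The symbols are assumptions of this note: $A^k$ is the $k$-th feature map, $y^c$ the score for class $c$ before the softmax, and $Z$ the number of spatial positions in the feature map.

$$
\alpha_k^c = \frac{1}{Z}\sum_{i}\sum_{j}\frac{\partial y^c}{\partial A_{ij}^k},
\qquad
L^c_{\text{Grad-CAM}} = \operatorname{ReLU}\!\left(\sum_k \alpha_k^c A^k\right)
$$

The ReLU keeps only regions that contribute positively to class $c$, which is why the heatmaps highlight the image areas supporting the predicted class.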

Examples of heatmaps used to discriminate the two classes are shown in Figure 3. In dorsal images, the model often uses the middle area of the trunk to discriminate the two classes, although the head region is also used, alone or in combination with the trunk. In female dorsal images, the head is not as frequently used as the trunk, but the portion of the trunk used for discrimination is generally more anterior than in males. In both male and female head lateral images, the area around the ear is the one most frequently used for classification, although this region can be shifted to varying degrees towards the throat in both sexes.

DISCRIMINATION BETWEEN THE NINE GROUPS

Overall, the performance of the different models for classification of the nine classes is worse than in the two-class case. Unlike the experiment involving only P. bocagei and P. lusitanicus, in all analyses considering nine classes there is some evidence of overfitting (see the Supporting Information, Tables S5–S9 for detailed training, validation and testing evaluation scores), which could not be completely overcome by varying the hyperparameters. A summary of the performance of each model is presented in Table 4.

In general, accuracy ranges from 76.3% for ResNet50 in female head perspectives to 85.3% for Inception-ResNetV2 in male dorsal views. A striking result is the highly significant difference between male and female image identification accuracy, with consistently higher accuracies in male datasets, which holds for both types of images (P < 0.0001 for all comparisons, both for accuracy and F1-score, Mann–Whitney–Wilcoxon test). On the other hand, there are no differences in performance between the two types of images, in either males or females. There are also no major differences between models in classification success. The only significant difference is detected in female head lateral images, in which ResNet50 performs significantly worse than Inception-ResNetV2 (P = 0.0325 for both accuracy and F1-score, Wilcoxon signed rank test).

In the two-class case, the utility of ensemble models is mostly restricted to the combination of predictions from different perspectives, without important improvements within each dataset. In the nine-class experiment, by contrast, ensemble models combining predictions from the three architectures for each image perspective greatly improve classification accuracy compared to the best single model (see Table 4).

Using estimates from different views by averaging across the six model predictions improves classification success even further. These results are shown in Table 5 and the respective confusion matrices in Figure 4. In this case, prediction accuracy reaches 93.5% for males and 91.2% for females.

The distribution of classification metrics according to the species is shown in Table 5. A closer look at these classification scores shows that several species are fairly well recognizable, with F1-scores above 0.90: this is the case for P. bocagei, P. carbonelli, P. lusitanicus, P. vaucheri s.l., P. vaucheri s.s. and P. virescens in males, and for the same species except P. lusitanicus in females. The most problematic species is, in both sexes, P. liolepis. The confusion matrices show that individuals of this species are often misclassified as P. virescens (more so in females than in males). Notably, misclassification between the cryptic P. guadarramae and P. lusitanicus is minimal (7.9% of P. lusitanicus females and 4.6% of males are classified as P. guadarramae, and 0% and 1.6% of P. guadarramae females and males, respectively, are classified as P. lusitanicus; see Fig. 4).
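Per-species scores of this kind can be derived directly from a confusion matrix. The following is a minimal sketch, assuming rows are true classes and columns are predicted classes; the function names and all counts are hypothetical and are not taken from Table 5 or Figure 4.

```python
def accuracy(confusion):
    """Share of all images whose predicted class equals the true class."""
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(len(confusion)))
    return correct / total

def per_class_f1(confusion, labels):
    """F1 per class; confusion[i][j] counts true class i predicted as class j."""
    n = len(labels)
    scores = {}
    for c in range(n):
        tp = confusion[c][c]
        fn = sum(confusion[c]) - tp                       # rest of the row
        fp = sum(confusion[r][c] for r in range(n)) - tp  # rest of the column
        denom = 2 * tp + fp + fn
        scores[labels[c]] = 2 * tp / denom if denom else 0.0
    return scores

def misclassified_share(confusion, true_idx, pred_idx):
    """Share of images of one true class assigned to another given class."""
    return confusion[true_idx][pred_idx] / sum(confusion[true_idx])

# Hypothetical three-class subset with made-up counts.
labels = ["P. guadarramae", "P. liolepis", "P. lusitanicus"]
confusion = [
    [45, 3, 2],
    [6, 38, 6],
    [4, 1, 45],
]

f1 = per_class_f1(confusion, labels)
worst = min(f1, key=f1.get)
print(accuracy(confusion), f1, worst)
print(misclassified_share(confusion, 2, 0))  # P. lusitanicus predicted as P. guadarramae
```

The last call corresponds to the kind of percentage quoted above for the cryptic species pair, computed row-wise so that it is expressed relative to the number of images of the true species.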

As for the two-class problem, Grad-CAM analyses show that, typically, the models use features of the lizard, and not other elements of the image, for classification. However, even with the visualization tool available, it is not straightforward to understand what the model considers for discrimination. More precisely, the same regions seem to be used to classify distinct species, and it is not evident how differences in these regions are used. The most common patterns for each species are summarized in Tables 6 and 7 (for males and females, respectively).

No known copyright restrictions apply. See Agosti, D., Egloff, W., 2009. Taxonomic information exchange and copyright: the Plazi approach. BMC Research Notes 2009, 2:53 for further explanation.